Tackling Lambda challenges #1: flaky latency

Serverless development is more popular than ever. No servers to maintain, out-of-the-box scalability and pay-as-you-go pricing make it an attractive platform for developers, but these advantages come with a unique set of challenges. For instance, response times for otherwise identical requests seem to fluctuate, structuring projects is not a straightforward exercise, and local development is perceived as difficult. Luckily, these challenges are anything but insurmountable, so let’s tackle them together. This post is the first in a series that zooms in on challenges developers may face. The list is by no means exhaustive, but these are challenges that developers encounter rather quickly. We will start with inconsistent response times, also known as flaky latency.

Flaky latency

Raise your hand if you have analysed the response times of your Lambda functions and found inconsistencies. Whether and how high you have just raised your hand will depend on a number of factors. Regardless, most serverless developers will at some point encounter flaky latency: some responses take significantly longer to arrive than others under seemingly identical conditions. We will analyse why this happens by looking at what Lambda is and then taking a deep dive into the lifecycle of Lambda functions. We will explore what drives fluctuations in response times and what our options are to tackle them.

Serverless compute on AWS Lambda

Lambda was released in 2014 and has since popularized serverless compute. With Lambda, developers only have to provide their code and the function configuration; they are no longer burdened with traditional server operations. Lambda functions are event-driven and stateless, meaning that they react to events and that you cannot rely on them to carry over any state between events. If multiple events come in at the same time, Lambda spins up more concurrent instances. This makes functions inherently scalable, to the point of becoming a burden on anything non-serverless and simply-not-that-scalable around them. This situation is often avoided by using serverless services only, for example by pairing Lambda functions with S3 and DynamoDB. The code that developers write must contain a predefined entry point called the handler. You could see it as the body of the function, where the business logic goes. The existence of a handler, and what goes outside of it, will become relevant a bit later in this post.

```python
from typing import Any, Dict

from aws_lambda_powertools.utilities.typing import LambdaContext


def handler(event: Dict[str, Any], context: LambdaContext) -> str:
    # Business logic goes here
    return "Hello World"
```

In true serverless fashion, Lambda does not expose much configuration. Imagine a knob you can turn: it determines how much memory your function receives. As you add more memory, more CPU is added proportionally. AWS gives no exact proportions or hard guarantees about how powerful the CPU is. This knob is the first and most important tool in the toolbox for tuning performance and cost.
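Because memory and CPU scale together, the knob is also a cost lever. Lambda bills compute roughly as allocated memory multiplied by execution time (GB-seconds), plus a per-request charge that we ignore here. The sketch below uses a hypothetical price parameter rather than a real list price, and assumes a CPU-bound function whose duration halves when memory doubles, which is not guaranteed in practice.

```python
def compute_cost(memory_mb: int, duration_ms: float, price_per_gb_second: float) -> float:
    """Approximate compute cost of one invocation: GB-seconds times a price.

    Ignores the per-request charge and any rounding of billed duration.
    """
    gb = memory_mb / 1024
    seconds = duration_ms / 1000
    return gb * seconds * price_per_gb_second


PRICE = 0.00002  # hypothetical price per GB-second, for illustration only

# Assumption: a CPU-bound function that runs twice as fast with twice the memory.
small = compute_cost(memory_mb=1024, duration_ms=200, price_per_gb_second=PRICE)
large = compute_cost(memory_mb=2048, duration_ms=100, price_per_gb_second=PRICE)
print(f"1024 MB: {small:.8f}  2048 MB: {large:.8f}")
```

If doubling memory does not halve the duration, for example because the function mostly waits on network I/O, the larger setting simply costs more for little gain.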

The Lambda function lifecycle

Imagine a restaurant dining room without tables and chairs. As guests come in, waiters have to fetch and set up a table for each guest, and guests have to wait until their table is ready before starting their meal. Once guests are finished, the tables are not removed but are kept on the floor for a few hours. After all, if a table is empty and available, a new guest can simply sit down and eat without having to wait. This analogy is not very realistic, but it helps when thinking about the function lifecycle. In this analogy, a Lambda function is the blueprint for a table: the instructions for the waiters (configuration) and the meal (code). Whenever you deploy a function, you create this blueprint. As guests (events) come in, your blueprint is used to set up tables (function instances), which are then used to consume the meal (run your code). We will leave the analogy here in favour of a technical deep dive, but whenever you are confused about Lambda, remember the unfortunate waiters and their restaurant tables.

Lifecycle deep-dive

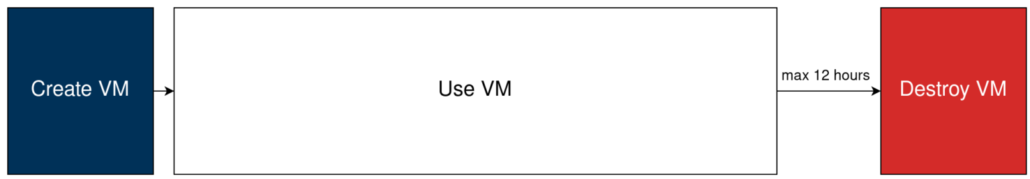

The lifecycle revolves around the creation of function instances, the so-called Execution Environments. In the past, there was little information about how Execution Environments were created and what they looked like exactly, so people attempted to reverse-engineer them. Much has changed since then, and AWS has even published a paper that goes into detail about the Lambda backend, so we can paint quite an accurate picture of what is going on. It appears that since 2018 function instances have been micro-VMs: very small, fast-to-boot virtual machines. Virtual machines were chosen for security reasons, as they provide a high level of isolation, but they do so at the cost of longer startup times. To minimize the impact of startup times, Lambda reuses VMs that are active but not processing any event. These VMs are kept alive for up to 12 hours and then destroyed.
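The reuse rule above explains why concurrency, not request volume alone, drives cold starts. The toy model below is our own simplification, not Lambda's actual scheduler: each request reuses an environment that is idle at that moment and otherwise creates a new one. The arrival times, durations and the `idle_timeout` parameter are hypothetical, and the time spent on a cold start itself is not modelled.

```python
from typing import List, Tuple


def count_cold_starts(requests: List[Tuple[float, float]], idle_timeout: float) -> int:
    """Count cold starts for (arrival_time, duration) pairs, in seconds.

    Toy model: an environment can be reused once its previous request has
    finished, until it has been idle for longer than idle_timeout.
    """
    free_at: List[float] = []  # time at which each environment becomes idle
    cold_starts = 0
    for arrival, duration in sorted(requests):
        # Environments idle for too long are destroyed.
        free_at = [t for t in free_at if arrival - t <= idle_timeout]
        # Environments that are idle right now can serve this request.
        idle = [i for i, t in enumerate(free_at) if t <= arrival]
        if idle:
            free_at[idle[0]] = arrival + duration  # warm: reuse it
        else:
            cold_starts += 1
            free_at.append(arrival + duration)  # cold: create a new one
    return cold_starts


sequential = [(0, 1), (2, 1), (4, 1)]  # one request at a time
burst = [(0, 1), (0.1, 1), (0.2, 1)]   # three overlapping requests
print(count_cold_starts(sequential, idle_timeout=60))  # 1
print(count_cold_starts(burst, idle_timeout=60))       # 3
```

Three requests cause one cold start when they arrive one after another, but three cold starts when they overlap, because no environment is idle when the second and third requests arrive.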

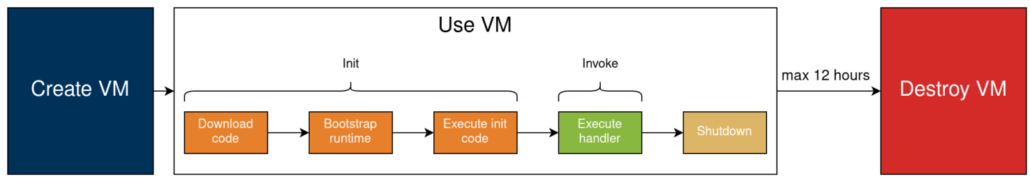

If we zoom in a little more, we find that the environment inside the VM goes through a number of stages on creation. First is the initialization stage, where the code is downloaded, the runtime is bootstrapped and part of your code is executed: the static code, meaning all the code outside the Lambda handler. Next is the execution stage where, unsurprisingly, the entry point of your function, the handler, is executed. This is the part of the function that contains your application logic. Once the handler has finished, the VM is frozen rather than destroyed and remains available for other events. On subsequent invocations, if this VM is not processing any other event, it is reused and all stages up to Invoke are skipped.

Flaky latency explained

Now that we understand the Lambda lifecycle, let’s get down to the issue at hand: sometimes functions take longer to respond. This is called a cold start, because the underlying issue is that no VM is available, so a new one has to be created from scratch. Not only do you incur a penalty for creating the VM, you also have to wait for your code to be downloaded and for the runtime to be bootstrapped. Depending on the language you write the function in, this cold start will be more or less pronounced, but it will always be there. The function lifecycle explains why the choice of language matters. As the code package grows larger, the download stage takes longer. When your runtime requires a slow-to-start virtual machine (looking at you, Java), the runtime bootstrap takes longer. Finally, it matters how fast your code executes, since the code outside your handler runs during the initialization stage. All these factors add up to what you experience as flaky latency.
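A small share of cold starts is enough to dominate the tail of the latency distribution, which is why latency looks flaky rather than uniformly slow. The numbers below are hypothetical, chosen only to show the effect: 98 warm invocations at 20 ms and 2 cold ones at 800 ms. The `percentile` helper is our own and uses the nearest-rank method.

```python
import math
from typing import List


def percentile(samples: List[float], p: float) -> float:
    """Nearest-rank percentile, for 0 < p <= 100."""
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[rank - 1]


# Hypothetical latencies in milliseconds: mostly warm, a few cold starts.
latencies = [20.0] * 98 + [800.0] * 2

print(f"p50:  {percentile(latencies, 50)} ms")            # p50:  20.0 ms
print(f"p99:  {percentile(latencies, 99)} ms")            # p99:  800.0 ms
print(f"mean: {sum(latencies) / len(latencies)} ms")      # mean: 35.6 ms
```

The median looks perfectly healthy and the mean only hints at a problem, while the p99 is forty times the typical response time.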

Tackling the issue

In general, we have three options to tackle flaky latency: we can optimize our code to start faster, we can simply not wait for a response, or we can configure Lambda to keep a number of VMs available at all times.

Optimization

Apart from picking a faster-to-start language, any improvements in this area need to happen during the initialization stage. Our options therefore include reducing the size of the code package, optimising the runtime bootstrap, or making the code outside the handler do less. Including fewer dependencies is a popular way to reduce the size of the code package. Optimising the runtime bootstrap is a valid strategy for languages such as Java, where the JVM can be tuned to start faster. Optimizing the code outside the handler can make a difference in any language. One way to do that is to limit the amount of auto-configuration in your code. For example, AWS client libraries search for credentials in multiple locations and try to work out which region your service runs in. Providing this information up front reduces the time these clients need to initialize.
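One complementary way to make static code do less, swapped in here as an illustration rather than as the only option, is to defer work that only some invocations need until it is first used, and then cache it for the rest of the environment's life. `load_report_template` is a hypothetical stand-in for expensive setup such as parsing a large file or building a client, and the `action` and `name` event fields are assumptions of this sketch.

```python
import functools
import time
from typing import Any, Dict


@functools.lru_cache(maxsize=None)
def load_report_template() -> str:
    # Hypothetical expensive setup; the sleep stands in for slow work.
    time.sleep(0.01)
    return "Report for {name}"


def handler(event: Dict[str, Any], context: Any) -> str:
    if event.get("action") == "report":
        # Paid once per environment, and only by invocations that need it.
        return load_report_template().format(name=event["name"])
    # Cheap path: never triggers the expensive setup.
    return "pong"
```

The trade-off is that the first invocation that needs the template pays the cost inside the handler instead of during initialization, so this helps most when the expensive path is rare.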

Asynchronous invocation

It is possible to sidestep inconsistent response times entirely by, well, not waiting. We are talking about asynchronous invocation here, where the function is triggered but its response is not awaited. Cold starts matter much less in this setting, so it becomes possible to favour developer productivity with frameworks and auto-configuration, even at the cost of performance. This approach is not always feasible and may require re-architecting the service, but otherwise it is a convenient way to stop worrying about the performance of your functions altogether.
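The difference between waiting and not waiting can be sketched in-process. With the AWS SDKs, the real-world equivalent is invoking a function with the `Event` invocation type, which queues the event and returns a 202 status without waiting for the function to run. The sketch below is only an analogy: a thread pool stands in for Lambda's internal queue, `slow_function` stands in for a function that may hit a cold start, and `api_entrypoint` is a hypothetical caller that acknowledges the request immediately.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import Any, Dict, List

executor = ThreadPoolExecutor(max_workers=2)  # stands in for Lambda's event queue
processed: List[int] = []


def slow_function(event: Dict[str, Any]) -> None:
    time.sleep(0.5)  # stand-in for a cold start plus business logic
    processed.append(event["id"])


def api_entrypoint(event: Dict[str, Any]) -> Dict[str, int]:
    # Fire and forget: hand the event off and return without awaiting it.
    executor.submit(slow_function, event)
    return {"statusCode": 202}  # "accepted", like an asynchronous invoke
```

The caller receives its response almost immediately, regardless of how long `slow_function` takes. The price is that results and errors have to be reported some other way, for example by writing them to a datastore.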

Provisioned concurrency

If enough VMs are available for your Lambda function to process incoming events, no cold starts occur. Developers can use this observation to keep their Lambda functions warm by periodically sending a number of simultaneous events. This is essentially a form of provisioning compute capacity, where you have to guess how many simultaneous events to provision for. Since December 2019, developers no longer have to provision capacity themselves: Provisioned Concurrency makes it possible to pay to keep a number of instances of a Lambda function available at all times. Note that in both cases this is a mitigation strategy that works flawlessly until you are hit with more traffic than you provisioned for. By paying for idle compute you gain more predictable performance, at least up to a point.
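The caveat about traffic beyond what you provisioned for can be made concrete with a deliberately simple model of our own: provisioned environments exist from the start, environments that have been created stay warm, and any concurrent demand above the current pool size causes cold starts. Real Lambda behaviour is more nuanced (on-demand environments are eventually reclaimed, for instance), and the demand figures are hypothetical. On AWS itself, the setting is configured per function version or alias, for example through the `PutProvisionedConcurrencyConfig` API.

```python
from typing import List


def cold_starts_per_step(demand: List[int], provisioned: int) -> List[int]:
    """Cold starts per time step, given concurrent demand per step.

    Simplified model: environments are never reclaimed once created.
    """
    pool = provisioned  # pre-initialized environments, ready from the start
    result = []
    for concurrent in demand:
        cold = max(0, concurrent - pool)  # demand the pool cannot absorb
        result.append(cold)
        pool += cold  # newly created environments stay warm afterwards
    return result


demand = [3, 5, 4, 12, 6]  # hypothetical concurrent requests per minute
print(cold_starts_per_step(demand, provisioned=0))   # [3, 2, 0, 7, 0]
print(cold_starts_per_step(demand, provisioned=5))   # [0, 0, 0, 7, 0]
print(cold_starts_per_step(demand, provisioned=12))  # [0, 0, 0, 0, 0]
```

Provisioning five instances removes the early cold starts but does nothing for the spike to twelve; only provisioning for the peak does, and that capacity sits idle the rest of the time.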

Concluding remarks

Most serverless developers will stumble upon flaky latency at some point. While it may seem mysterious at first, it really isn’t. AWS has to balance predictable performance against the cost of available yet idle compute. If VMs were available for you at all times, the service would be called EC2. Instead, VMs are provisioned as needed and kept idle for an undefined period of time to mitigate the cold-start penalty. Knowing this, we can choose to optimize our code, avoid the problem with asynchronous invocations, or reach for provisioned concurrency. The need for optimization may slowly fade as AWS improves the Lambda platform, but unless compute capacity is available for you 24×7, the cold-start penalty will persist to some degree. Meanwhile, we have our own knob, one that slides from consistent response times to developer productivity. If we commit to consistent performance, we inevitably give up some comfort in development and sacrifice productivity. As we choose to include frameworks and other conveniences that boost our productivity, we inevitably sacrifice some consistency. Assess your environment and set your knob to a position that works for you.