Empowering .NET Developers with Unified AI Integration

In October 2024, Microsoft introduced Microsoft.Extensions.AI, a new library for the .NET ecosystem that is now available in preview. It aims to simplify how developers integrate generative AI capabilities into their applications by offering unified interfaces such as IChatClient. Let’s explore what the library offers and how it can benefit developers.

Why Use Microsoft.Extensions.AI?

Unified API Abstraction

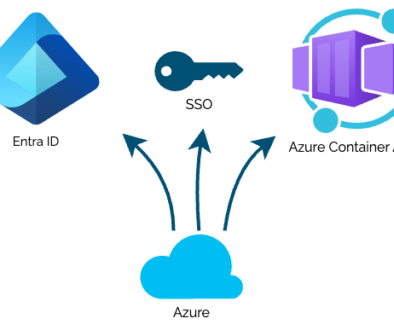

The API provided by Microsoft.Extensions.AI enables .NET applications to integrate AI services in a unified way. This simplifies working with various AI providers, allowing developers to focus on building features rather than managing multiple APIs. Supported providers can be cloud-based or run locally on your machine. At the moment, the library supports OpenAI, Azure AI Inference, and Ollama.

Flexibility and Portability

Because of the unified API, the library offers a flexible way to extend your application with AI. You can experiment with different AI services while keeping a single API throughout the application. As both cloud-based and locally running providers are supported, you can experiment with and optimize your solution locally before scaling up to a more powerful cloud-based provider, without changing your application code (apart from configuration).

Enhanced Componentization

Microsoft.Extensions.AI makes it easier to test your applications and to add new capabilities. Its modular approach leads to more maintainable and scalable code, and it streamlines breaking applications into components, testing those components, and integrating new features.
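As a rough illustration of why coding against an abstraction helps with testing, here is a minimal sketch. It deliberately does not use the library itself: ITextGenerator, Summarizer and FakeTextGenerator are hypothetical names invented for this example, with ITextGenerator standing in for an interface such as IChatClient. The component under test only knows the interface, so a test can hand it a fake that returns a canned answer.

```csharp
using System;
using System.Threading.Tasks;

// Exercise the component with a fake instead of a real model.
var fake = new FakeTextGenerator("stub summary");
var summarizer = new Summarizer(fake);

string result = await summarizer.SummarizeAsync("A long article about .NET.");
Console.WriteLine(result);          // the canned answer from the fake
Console.WriteLine(fake.LastPrompt); // the prompt the component built

// Hypothetical, simplified abstraction standing in for an interface like IChatClient.
public interface ITextGenerator
{
    Task<string> GenerateAsync(string prompt);
}

// Application component that depends only on the abstraction.
public sealed class Summarizer
{
    private readonly ITextGenerator _generator;

    public Summarizer(ITextGenerator generator) => _generator = generator;

    public Task<string> SummarizeAsync(string text) =>
        _generator.GenerateAsync($"Summarize in one sentence: {text}");
}

// Test double: returns a canned answer and records the prompt it received.
public sealed class FakeTextGenerator : ITextGenerator
{
    private readonly string _cannedAnswer;

    public string? LastPrompt { get; private set; }

    public FakeTextGenerator(string cannedAnswer) => _cannedAnswer = cannedAnswer;

    public Task<string> GenerateAsync(string prompt)
    {
        LastPrompt = prompt;
        return Task.FromResult(_cannedAnswer);
    }
}
```

In a real project the same idea would apply to the library's own interface: the production code receives a provider-backed client, the test receives a fake.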

Middleware Support

The library includes middleware for adding key capabilities such as caching, telemetry, and tool calling. These standard implementations work with any provider, reducing the need for custom solutions. This article does not cover the middleware, but it is good to be aware of it because of the possibilities it offers, for instance when developing AI agents.

Getting started with local development

To get started quickly with generative AI, we can use local LLMs hosted with Ollama. Ollama is a tool that exposes open-source LLMs in your local development environment over HTTP. As mentioned earlier, Ollama is supported by Microsoft.Extensions.AI. The advantage is that we can use the same interface locally through the library and leave the code unchanged (apart from configuration) when switching to an online LLM service.

Install Ollama

Ollama is the most convenient option for local development. Download the installer from ollama.com/download. After installation, open a command prompt (or a shell on macOS/Linux) and download the phi3.5 model by running `ollama pull phi3.5`.

We use the phi3.5 model here because it is lightweight enough for local use yet performs well.

Once installed, Ollama runs in the background and is available not only from the command prompt but also through an HTTP endpoint. That endpoint is our entry point from the .NET code.
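To check from .NET that the endpoint is reachable before involving any AI library, a small probe with HttpClient can help. This is a sketch under assumptions: it assumes Ollama listens on its default address http://localhost:11434 and uses the root path and the /api/tags route of Ollama's REST API (the latter lists the models available locally). Adjust the address if your installation differs.

```csharp
using System;
using System.Net.Http;
using System.Threading.Tasks;

// Assumption: Ollama listens on its default local address.
var endpoint = new Uri("http://localhost:11434/");

using var http = new HttpClient { Timeout = TimeSpan.FromSeconds(5) };

try
{
    // The root path answers with a short plain-text status message.
    string status = await http.GetStringAsync(endpoint);
    Console.WriteLine(status);

    // /api/tags returns a JSON document listing the locally available models.
    string models = await http.GetStringAsync(new Uri(endpoint, "api/tags"));
    Console.WriteLine(models);
}
catch (Exception ex) when (ex is HttpRequestException or TaskCanceledException)
{
    // Connection refused or timeout: Ollama is probably not running.
    Console.WriteLine($"Could not reach Ollama at {endpoint}: {ex.Message}");
}
```

If phi3.5 does not show up in the model list, the model has not been downloaded yet.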

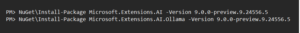

Add extension packages to your project

To get started, create a console project with the default settings, then add the AI extension package, Microsoft.Extensions.AI, to the project. To use Ollama we also need an extra package that adds Ollama support, Microsoft.Extensions.AI.Ollama. The example below uses the Package Manager Console in Visual Studio:

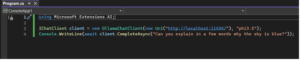

Now replace the contents of Program.cs with the following source code:

These few lines create a client that connects to the LLM served by Ollama on localhost.
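A minimal sketch of such a program could look as follows. It assumes the October 2024 preview of the Microsoft.Extensions.AI and Microsoft.Extensions.AI.Ollama packages; the names OllamaChatClient and CompleteAsync come from that preview and may differ in other versions, so check them against the version you install. The question text is only an example.

```csharp
using System;
using Microsoft.Extensions.AI;

// Ollama's default local endpoint and the model pulled earlier.
IChatClient client =
    new OllamaChatClient(new Uri("http://localhost:11434/"), "phi3.5");

// Send a single question and wait for the complete answer.
// Assumption: CompleteAsync is the method name in the October 2024 preview.
var response = await client.CompleteAsync("What is AI? Answer in one short paragraph.");

// The answer text is carried by the message in the response.
Console.WriteLine(response.Message.Text);
```

Because the variable is typed as IChatClient, only the line that constructs the client would need to change when switching to another provider.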

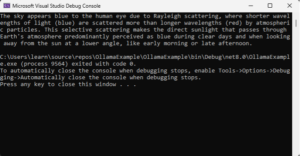

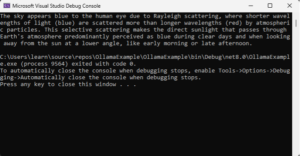

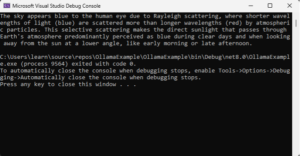

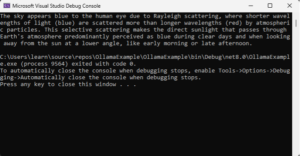

Ollama typically listens at http://localhost:11434, and we specify the phi3.5 model that we pulled earlier. The output of the program will look like:

In this simple setup our client sent a question and received the answer in the response. This is just a starting point: you can extend the question with a prompt and add chat history for a more chat-like implementation with recurring interaction.
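One way to sketch such a chat-like loop is to keep all messages in a list and send the whole list with every request. As before, this assumes the October 2024 preview API (OllamaChatClient, ChatMessage, ChatRole, CompleteAsync); the system prompt text is made up for the example.

```csharp
using System;
using System.Collections.Generic;
using Microsoft.Extensions.AI;

IChatClient client =
    new OllamaChatClient(new Uri("http://localhost:11434/"), "phi3.5");

// The history starts with a system prompt that steers every answer.
var history = new List<ChatMessage>
{
    new ChatMessage(ChatRole.System, "You are a concise assistant for .NET developers.")
};

while (true)
{
    Console.Write("You: ");
    string? input = Console.ReadLine();
    if (string.IsNullOrWhiteSpace(input)) break; // an empty line ends the chat

    history.Add(new ChatMessage(ChatRole.User, input));

    // Passing the whole history lets the model see the earlier turns.
    var response = await client.CompleteAsync(history);
    Console.WriteLine($"AI: {response.Message.Text}");

    // Keep the answer so the next question has context.
    history.Add(response.Message);
}
```

The history grows with every turn, so a longer-running chat would need some form of trimming or summarizing to stay within the model's context window.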

You can also start experimenting with other models: ollama.com/library lists all available models and their specific use cases. Be aware that the larger the model, the more RAM it uses on your development machine. A good rule of thumb is that you need twice the size of the model plus 500 MB of available RAM.
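The rule of thumb is easy to turn into a small helper. The sketch below simply computes twice the model size plus 500 MB; RamEstimator is a hypothetical name, and the model sizes in the loop are illustrative values, not the sizes of specific models.

```csharp
using System;

// Hypothetical model sizes in megabytes, for illustration only.
foreach (long modelSizeMb in new[] { 2_000L, 4_000L, 8_000L })
{
    long ramMb = RamEstimator.RequiredRamMb(modelSizeMb);
    Console.WriteLine($"{modelSizeMb} MB model: about {ramMb} MB of RAM");
}

public static class RamEstimator
{
    // Rule of thumb from the text: twice the model size plus 500 MB.
    public static long RequiredRamMb(long modelSizeMb) => 2 * modelSizeMb + 500;
}
```

For a 2,000 MB model this gives 4,500 MB, which is a quick way to judge whether a model is likely to fit on your machine before downloading it.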

Conclusion

Setting up a project that uses generative AI locally is easy with the new Microsoft.Extensions.AI packages. This blog only gave an example of a very simple exchange, but if you dive into the Microsoft Learn pages and read more about this extension package, you will soon discover more possible use cases.

And there is much more: with the Microsoft libraries AutoGen and Semantic Kernel, the possibilities seem endless once you start with multi-agent systems (agentic AI), but that’s for another series of blogs…


