How Infrastructure as Code empowers developers

Managing infrastructure has been a challenge for a long time. Traditionally, it involved a large amount of manually entered commands, mouse clicks and other human-driven labor.

As more and more companies work with large numbers of servers and duplicated infrastructure across environments, we’re running into the limits of this approach. This manual way of working has multiple problems. It is error-prone, as performing actions in a slightly different way can result in large differences. It can be slow, especially when we want multiple copies of each server. Plus, the approach relies on very accurate documentation of the state of each piece of infrastructure, server and procedure. In many cases, this documentation is not kept as up-to-date as it should be, resulting in problems during development or production.

Infrastructure as Code is all about applying modern practices of software development to the management of your IT infrastructure. It empowers you to do your job as easily as possible. How? By making use of the best aspect of working with computers: automation. It enables consistent rollouts of your software, frequent updates of your servers and it frees up your developers for actual improvements instead of just keeping the existing servers afloat.

This post is intended as an introduction to the concept of Infrastructure as Code. While there are some examples, we will not explain how to write it. We will show various types of Infrastructure as Code that are in active use, and show the ways of working they enable.

Traditional server configuration

Let’s start by looking at a very important component of our infrastructure: a server.

In general, a server is just a computer that we run in a closet or somewhere similar to do serious work. So not your personal laptop or your gaming computer at home, but the ones serving websites, Steam downloads, Discord voice calls and so on. Similar to your personal laptop, you install some programs on it when you get it, install updates over time, and add other programs you want.

Sooner or later, you need to reinstall it – either it’s getting too slow, it has malware, or you simply need a backup server. For a PC, it does not matter much if this second installation looks slightly different. But for professional servers, that can lead to nasty surprises: websites that behave slightly differently, or crashes returning that you had solved on the old installation by changing some obscure configuration. By starting from scratch, we risk forgetting important details.

This means that we need to keep extensive documentation on its installation. Something most people do not like doing. But it’s essential to have consistent installs, and to make a system that others can work with as well.

So we need to find a way to make our setup reproducible, to make it scale.

Repetition, repetition, repetition.

Let’s imagine the simplest way we could automate this.

You’ve gone through the process of setting up a server once. By keeping careful step-by-step documentation, you described exactly what commands you had to enter to set this server up. Well, then we’re already very close to a shell script, aren’t we?

    echo 'Running setup script...'

    # Install the server (-y answers the confirmation prompt)
    sudo yum install -y mysql-server

    # Copy the config file if it does not yet exist
    if [ ! -f /etc/my.cnf ]; then
        sudo cp /mnt/usbdrive/my.cnf /etc/my.cnf
    fi

    # Start the database automatically on boot
    sudo systemctl enable mysqld

Every major computer OS has at least one language that you can use out-of-the-box for automation. Maybe it’s Bash, maybe it’s PowerShell; that depends on your operating system of choice. But all of them boil down to being a list of commands that get executed when you run the script.

But of course, we later realize we need some changes in the setup of this server.

It’s easy to change the script so that the next server looks different. But you’d prefer to have one central script that works both for existing servers, which need to be changed, and for new ones. You could solve this by creating a sequential list of scripts. Each one changes the “output” of the last, until we arrive at the server setup we want.

    $ ls -lh ./install-scripts
    -rw-r--r-- 1 34K Jan 06 16:30 setup-1.sh
    -rw-r--r-- 1 187B Mar 20 16:30 setup-2.sh
    -rw-r--r-- 1 20B May 31 16:30 setup-3.sh

We could go ahead and make a central script that runs all of them. Then we would need to keep track of what the last executed script was on this server. So now we need to keep our own state… This will quickly get tricky.
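To make the bookkeeping concrete, here is a minimal Python sketch of such a central runner. It assumes the scripts follow the `setup-N.sh` naming shown above, and it stores the number of the last completed script in a state file. The function names and the state file are hypothetical, chosen only for illustration.

```python
import subprocess
from pathlib import Path


def script_number(script: Path) -> int:
    """Extract the sequence number from a name like 'setup-12.sh'."""
    return int(script.stem.split("-")[1])


def pending_scripts(script_dir: Path, state_file: Path) -> list[Path]:
    """Return the setup scripts that have not yet run on this server."""
    # The state file holds the number of the last script that completed.
    last_done = int(state_file.read_text()) if state_file.exists() else 0
    # Sort numerically, so that setup-10.sh comes after setup-2.sh.
    scripts = sorted(script_dir.glob("setup-*.sh"), key=script_number)
    return [s for s in scripts if script_number(s) > last_done]


def run_script(script: Path) -> None:
    """Run one script with sh and raise if it exits with an error."""
    subprocess.run(["sh", str(script)], check=True)


def run_pending(script_dir: Path, state_file: Path, run=run_script) -> None:
    """Run every pending script in order, recording progress after each."""
    for script in pending_scripts(script_dir, state_file):
        run(script)
        # Only reached when the script succeeded, so a failed script
        # is retried on the next invocation.
        state_file.write_text(str(script_number(script)))
```

Calling `run_pending(Path("./install-scripts"), Path("/var/lib/setup-state"))` on a fresh server would run all three scripts, and on an existing server only the new ones. Even this small version has to decide what happens when a script fails halfway, which is exactly the kind of state handling that gets tricky.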

But! There’s a better way.

    ---
    - name: Install Mysql package
      yum:
        name: mysql-server
        state: installed

    - name: Create Mysql configuration file
      template:
        src: my.cnf.j2
        dest: /etc/my.cnf

    - name: Start Mysql Service
      service:
        name: mysqld
        state: started
        enabled: yes

This is an example of Ansible, one of many desired state configuration tools. The core idea behind desired state configuration like this is that you state what you want the end result to be, not how it should be achieved. The tool, Ansible in this case, will check the current situation, and run the correct commands.
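The "check the current situation, then run only what is needed" idea can be sketched in a few lines of Python. This is not how Ansible is implemented; it is a toy model in which a server's state is a dictionary of package names, and `plan` and `apply` are hypothetical helpers.

```python
def plan(current: dict[str, str], desired: dict[str, str]) -> list[str]:
    """Compare current and desired package states and list needed actions."""
    actions = []
    for package, wanted in desired.items():
        # A package we know nothing about is treated as not installed.
        actual = current.get(package, "absent")
        if actual == wanted:
            continue  # already in the desired state, nothing to do
        verb = "install" if wanted == "installed" else "remove"
        actions.append(f"{verb} {package}")
    return actions


def apply(current: dict[str, str], desired: dict[str, str]) -> dict[str, str]:
    """Pretend to run the planned actions and return the resulting state."""
    return {**current, **desired}


server = {"nginx": "installed"}
desired = {"nginx": "installed", "mysql-server": "installed"}

print(plan(server, desired))   # ['install mysql-server']
server = apply(server, desired)
print(plan(server, desired))   # []
```

The second call returns an empty plan: running the same definition against a server that already matches it changes nothing. That property is what lets one definition serve both new and existing servers.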

Tools like Ansible even have ways to encapsulate common classes of behaviour in “classes” or “roles”. So you can say that a server is “monitored by tool A”, and “runs Docker images”, and Ansible will apply the correct sets of instructions for both. This means we get to apply some concepts we like to use in programming, like composition, in our infrastructure management!

After converting our commands to desired state in Ansible, we have something we can easily put in version control. Effectively, we’ve converted the configuration of our server to code.

Deleting everything. Constantly.

Imagine not having to keep a server up and healthy as long as possible. Using modern technologies, it’s easy to build your system out of virtual servers that only exist until they next need to be changed. We can create an image of a server we’ve set up once, then deploy it as often as we want. If there are updates to the operating system or our application, we create a new image, and deploy that.

So we throw away the server whenever something needs to change.

What does this look like in practice?

One way is to create these images manually. You boot up a fresh Linux install and go through the motions of setting up everything you need except your application code itself: Nginx, MySQL, a monitoring agent. Then you create a snapshot of that OS and create servers from it. All you need to do after that is put your application on it, and you are done. This is much faster than installing all packages from the internet each time, because you are effectively unpacking a single compressed archive: the image.

But we’ve seen that scripting these steps is easier. There’s a very big player in the market that boils down to doing this: Docker Containers.

    # syntax=docker/dockerfile:1
    FROM node:12-alpine
    RUN apk add --no-cache python2 g++ make
    WORKDIR /app
    COPY . .
    RUN yarn install --production
    CMD ["node", "src/index.js"]
    EXPOSE 3000

We write something that vaguely resembles our shell scripts, and after running it, we get an image that we can deploy as often as we want. It’s made to be easy to automate, and you can include almost any setup step in these Dockerfiles.

Where we might once have been proud of servers with half a year of uptime, nowadays virtual machines that don’t live longer than a day are not uncommon at all. The reason is not that we hate long uptimes. Short-lived servers exist because they make it possible to scale up rapidly. Being able to create servers in seconds and delete them when you don’t need them anymore makes it possible to pay exactly for what you’re using, or to run very large jobs every night without paying thousands of dollars a month to keep the servers running constantly.

We call these immutable servers, and the concept in general immutable infrastructure. The alternative we saw before is called mutable infrastructure. Infrastructure as Code is a big enabler of this immutability, and for many solutions, it is easier to maintain than systems we keep tweaking over a period of months or years.

Infrastructure as Code

So far, we’ve only talked about servers. Infrastructure is, of course, a lot more than just this. We also have networking, storage, the underlying hardware of the server, and so on.

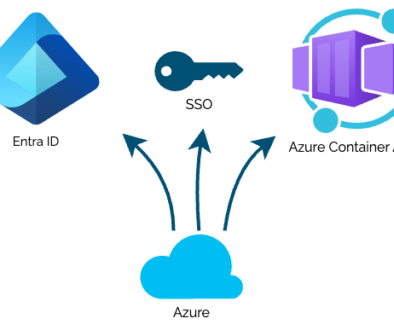

In practice, any component that is virtual (as opposed to, say, a physical one you have to plug in by hand) can be automated, and all the major cloud providers have virtualized almost anything you can think of. And it’s here, in the cloud, that Infrastructure as Code – true infrastructure, not just servers – truly starts to shine.

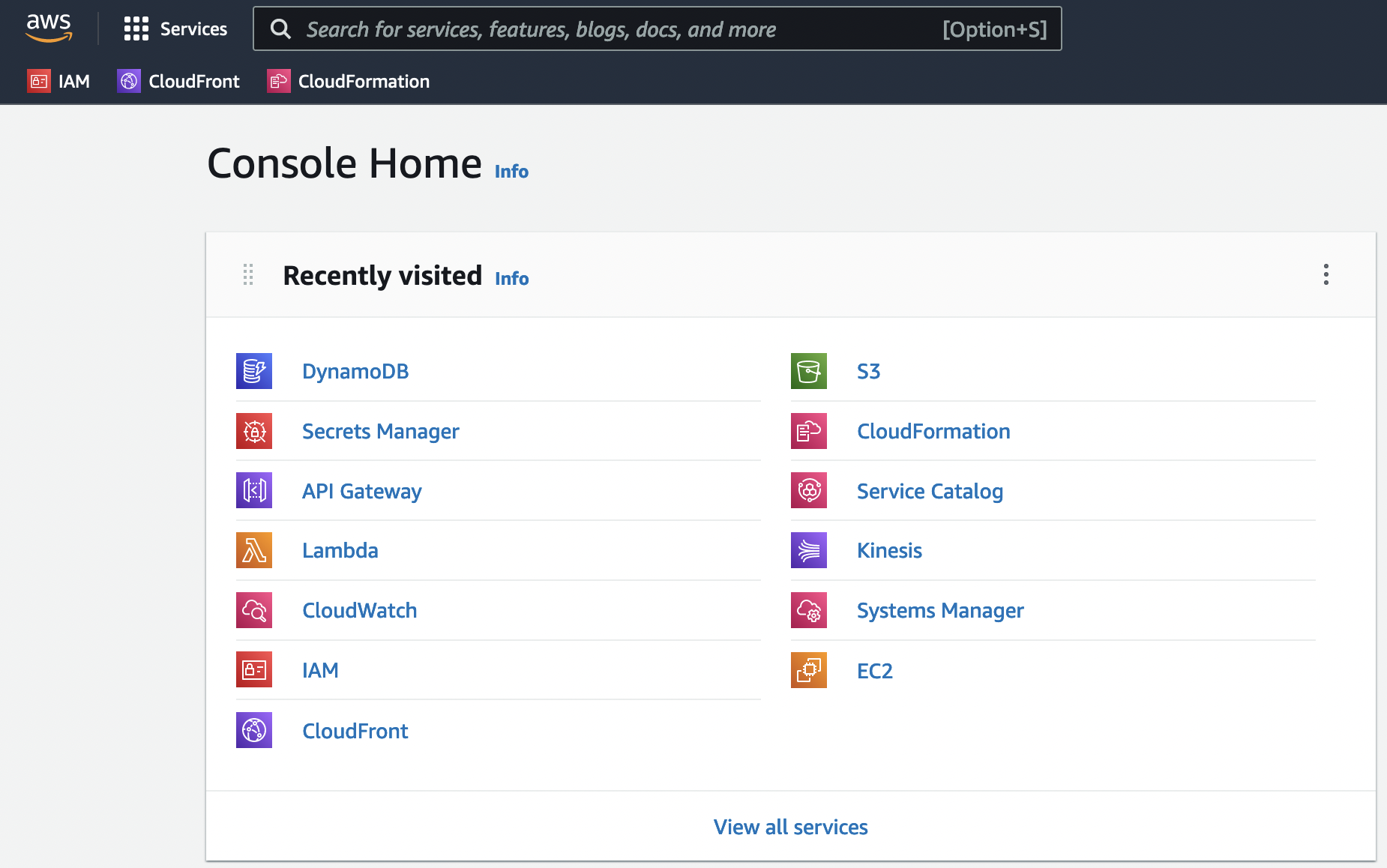

When you first start out working with a cloud provider, you might log on to their web console, launch a virtual machine or two, create a managed database and connect them. But then, you need to do it again for your production environment, and you have to make the exact same clicks so the environments are similar enough.

But you can automate this.

Cloud providers have done something awesome: they created and published APIs with which you can do nearly anything you can do manually in their web consoles.

One approach would be to write another shell script. This time, it runs a series of HTTPS calls to your cloud provider. But: we’ve learnt about the power of desired state configuration. So we know there is something better.

    Resources:
      StaticFilesBucket:
        Type: AWS::S3::Bucket

      ServerlessWebHandler:
        Type: AWS::Lambda::Function
        Properties:
          Runtime: python3.9
          # Handler and Role are also required; omitted here for brevity
          Code:
            S3Bucket: my-example-bucket
            S3Key: serverless-web-handler/v1.zip
          Environment:
            Variables:
              STATIC_FILES_BUCKET: !Ref StaticFilesBucket

This is CloudFormation. It’s the official language of Amazon Web Services (AWS) for defining cloud infrastructure as desired state.

We can put this in a GitHub repository and automatically deploy it to the correct AWS account – test or production – at the push of a button. You change your code, automated checks verify it, someone reviews whether it is functionally correct, and you merge it. You run your CI/CD pipeline, and minutes later your AWS account is up-to-date with the new environment. It creates virtualized, managed databases, message queues, networks and servers based on a bit of configuration. And after testing it, you click another button, and it replaces your production environment in-place, without downtime.

So there you have it: we can now create complete, complicated, multi-tier application infrastructure as YAML.

Infrastructure as real Code

If you’re like me, you might feel a bit deceived about hearing “code” and seeing YAML. The good news is, we have much better alternatives nowadays. For most Cloud Providers, there are development kits that allow you to write normal code – like Python, Java or C# – and generate your infrastructure from that!

    from aws_cdk import Stack, aws_lambda, aws_s3
    from constructs import Construct


    class MyExampleStack(Stack):
        def __init__(self, scope: Construct, construct_id: str, **kwargs):
            super().__init__(scope, construct_id, **kwargs)

            static_files_bucket = aws_s3.Bucket(
                self,
                id="StaticFilesBucket",
            )

            serverless_web_handler = aws_lambda.Function(
                self,
                id="ServerlessWebHandler",
                runtime=aws_lambda.Runtime.PYTHON_3_9,
                # Assumed entry point: a handler() function in index.py
                handler="index.handler",
                code=aws_lambda.Code.from_asset("./functions/web-handler/"),
                environment={
                    "STATIC_FILES_BUCKET": static_files_bucket.bucket_name,
                },
            )

            # Allow the function to read objects from the bucket
            static_files_bucket.grant_read(serverless_web_handler)

What you see here is CDK: the AWS Cloud Development Kit. CDK is a collection of libraries and tools you can use to create cloud infrastructures. It’s available in multiple languages – so far in JavaScript, TypeScript, Python, Java, C# and Go.

It can generate all the same infrastructure you can with CloudFormation. But instead of plain old YAML, you can use a real programming language.

YAML has some distinct disadvantages compared to a real programming language: it lacks custom functions, for/while loops, easily reusable libraries and much more. By putting our infrastructure definition in actual code, the intent of our definition becomes much more readable.

By “synthesizing”, or running, a CDK project, the underlying CloudFormation will be generated. In that way, the step from CloudFormation to CDK feels similar to other steps forward we’ve made from low-level programming languages to high-level ones! But in this case, we still often run into the “Assembly” variant of the code: CloudFormation. That makes it a good idea to check the CloudFormation that is generated by your CDK code.
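To illustrate what a loop buys us, here is a small sketch that generates a CloudFormation-style template from ordinary Python. It deliberately does not use CDK: a plain dictionary stands in for the synthesis step, and the bucket names are made up for the example.

```python
import json


def bucket_template(names: list[str]) -> dict:
    """Build a CloudFormation-style template with one S3 bucket per name."""
    return {
        "Resources": {
            # One resource entry per name, instead of copy-pasted YAML.
            f"{name}Bucket": {"Type": "AWS::S3::Bucket"}
            for name in names
        }
    }


template = bucket_template(["StaticFiles", "Uploads", "Logs"])
print(json.dumps(template, indent=2))
```

Adding a fourth bucket is a one-word change to the list, while the generated output grows by a full resource block. Printing the result is also a cheap way to inspect what would be deployed.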

At the time of writing, we highly recommend anyone getting started with Infrastructure as Code on AWS to try out CDK.

The power of the automated cloud

So far, we’ve seen a few steps of automation. From shell scripts, to desired state configuration of long-living servers, to immutable servers, and on to complete immutable infrastructures defined as desired state. But you may still be wondering – what’s all the fuss about?

So let’s take a little more time to envision something that becomes possible with the automated cloud.

Imagine a company running a couple of webservices that are used by customers to send large sets of data and to retrieve analyzed results. They’re already running these in Docker containers – which means that, because creating the “server” these services run on is automated, we can easily recreate these in various environments.

We create an AWS account for each environment – test, acceptance and production – and spin up each service in each environment. Changing the “size” of each underlying resource, such as CPU, memory and storage, is a matter of passing along different parameters in a few fields of our Infrastructure as Code. That makes it incredibly easy to have otherwise identical services in each account, with differing amounts of compute resources, and so to save money in the test environment. One step further would be to use serverless services, where we only pay for what we use – but that’s a different topic!
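As a rough sketch of "same service, different parameters", the snippet below keeps the sizes per environment in one table and derives each service definition from it. The field names, the numbers and the `service_definition` helper are all hypothetical and not tied to any particular AWS service.

```python
from dataclasses import dataclass, asdict


@dataclass(frozen=True)
class ServiceSize:
    cpu_units: int
    memory_mib: int
    storage_gib: int


# Illustrative sizes only: small and cheap for test, larger for production.
SIZES = {
    "test": ServiceSize(cpu_units=256, memory_mib=512, storage_gib=20),
    "acceptance": ServiceSize(cpu_units=512, memory_mib=1024, storage_gib=50),
    "production": ServiceSize(cpu_units=2048, memory_mib=8192, storage_gib=500),
}


def service_definition(name: str, environment: str) -> dict:
    """Describe one service; only the size depends on the environment."""
    return {
        "name": name,
        "image": f"{name}:latest",
        **asdict(SIZES[environment]),
    }


for env in SIZES:
    print(env, service_definition("ingest-api", env))
```

Everything except the three size fields is identical across environments, so a change to the service itself automatically applies everywhere.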

The services can place the submitted datasets in a managed storage location for processing.

Next, we have a few worker processes that pick up this data and generate the information our customers want from us. Thanks to the ease with which we can automatically create and delete servers, we actually scale the number of workers up and down depending on the amount of work to be done. This means we can run cheap when it’s calm, and still work very fast during the busier days.
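The scaling decision itself can be very simple. The following sketch picks a worker count from the number of queued jobs; the thresholds are assumptions for illustration, and in practice a managed autoscaling service would usually make this decision based on a similar rule.

```python
import math


def desired_workers(
    queued_jobs: int,
    jobs_per_worker: int = 10,
    min_workers: int = 1,
    max_workers: int = 20,
) -> int:
    """Return how many workers to run for the current queue length."""
    # Enough workers to give each at most `jobs_per_worker` jobs...
    needed = math.ceil(queued_jobs / jobs_per_worker)
    # ...but never below the minimum or above the budgeted maximum.
    return max(min_workers, min(max_workers, needed))


print(desired_workers(0))     # 1
print(desired_workers(95))    # 10
print(desired_workers(1000))  # 20
```

The upper bound matters as much as the scaling rule: it caps the bill on an unexpectedly busy day.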

We now have an autoscaling environment with barely any maintenance to the underlying system. Thanks to the automated cloud. But it gets better.

We can create an AWS account for each developer, which means they can all work completely isolated from each other when they want. And after each merge to our integration branch – after a developer has finished a bug fix or feature – we can automatically test the code in a real environment. We deploy the entire stack to another AWS account, and run automated tests against it as if we were our customers. After finishing the test run, we delete all the resources again, having spent barely any money on a true end-to-end test.
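The deploy, test, delete cycle maps naturally onto a context manager, which guarantees cleanup even when the tests fail. In this sketch, `deploy` and `destroy` are placeholders for whatever your pipeline uses to create and remove a stack; they are passed in as functions because the real calls depend on your tooling.

```python
from contextlib import contextmanager


@contextmanager
def temporary_environment(deploy, destroy, name: str):
    """Deploy a throwaway environment and always tear it down afterwards."""
    environment = deploy(name)
    try:
        yield environment
    finally:
        # Runs on success and on failure, so no resources are left behind.
        destroy(environment)


# Stand-ins that only print; a real pipeline would call its IaC tool here.
def fake_deploy(name: str) -> str:
    print(f"deploying {name}")
    return name


def fake_destroy(environment: str) -> None:
    print(f"destroying {environment}")


with temporary_environment(fake_deploy, fake_destroy, "e2e-test") as env:
    print(f"running end-to-end tests against {env}")
```

Because teardown sits in the `finally` block, a failing test run costs the same as a passing one.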

All the while, the developers were focused on what they enjoy doing – building things – instead of keeping the systems running, tweaking the configuration of our message queues, adding and removing storage, etcetera. Setups like these have been possible for a long time. But we’ve only recently arrived at a point where it’s become feasible for companies of any size to implement this, without spending much more money on resources or extra developers.

And all of this is not hypothetical. We’ve seen and helped our own customers create setups just like this. This is actually used – a lot – in production, and while it will take some getting used to, it will speed up your development enormously. Good Infrastructure as Code empowers you, as a developer, and as a company.